Can’t see the forest for the trees

Thought

“We don’t have runway left,” said co-pilot HS Ahluwalia to his captain, the last words recorded in the cockpit. The plane then plunged into a forested gorge and was engulfed in flames. Only the black box revealed the truth: the captain had ignored the co-pilot's plea to abort the landing, which led to the tragedy.

This kept nudging me for quite some time. We testers test all the time (and a lot of it is ‘black box’ testing), yet, ironically, most of our IT applications don’t have a ‘black box’ of their own.

While testing complex systems, teams spend a good amount of time figuring out what’s happening under the hood to determine the application’s actual behaviour. This is especially true when a production issue pops up. Support and test teams then take a long time, not only to reproduce the issue but also to work out what happened behind the scenes, before they can find the root cause and provide a fix (if required). Meanwhile, customers bite their nails waiting to hear from IT.

Then, while researching the topic, I stumbled upon this:

“Think of it as a flight data recorder, so that any time there's a problem, that 'black box' is there helping us work together and diagnose what's going on,” Bill Gates said during a speech at the Windows Hardware Engineering Conference. I understand Bill was speaking from the Windows point of view. But isn’t this at least equally applicable, if not more so, to IT apps where we want to reduce development and support costs? Shouldn’t we have a “black box” too, so that our testers can do “black box” testing in less time?

This paper targets testability at the system level, in the given test context, for two audiences:

- Machines: ensuring automation tools can validate a feature’s implementation reliably and easily.
- Humans: customers, users, testers and developers.


“The situation is very similar to that of a man who doesn’t even know he is suffering from a serious disease; instead of going for a check-up, he blames other factors for his weakness and symptoms.”

Because “poor testability” often acts as a slow poison (rather than a sudden death), it is hard to detect. Testers who are unaware of it may attribute their pain to the wrong problems and keep trying to address it with workarounds such as:

- working extra hours
- hiring more resources
- expensive automation
- risk-based testing
- better estimation and planning

None of these brings any real long-term success, and this vicious cycle keeps haunting them release after release.

The point is that a lot of teams don’t even realize testability is their pain point, and hence it doesn’t get the attention it truly deserves.

Testers who do realize testability is the culprit know better. Testability is difficult to fix once the damage is done (as in legacy apps), and the fix keeps getting postponed because cleaning up code is not fancy, not fashionable and, most importantly, not cheap.

Sometimes our development team acts as Good Samaritans and comes to the testers’ rescue by offering to refactor or redesign for testability (a lot of good material has been written by testability gurus like Misko). Even then, interestingly and surprisingly, the test teams and customers may not agree. The reason is obvious: changing a functional product is risky, because it can introduce regression issues that can’t be adequately tested in the time available. So they may settle for living with bad testability.

If testability is not tackled right from the design phase, it can become a major problem in future releases. This paper therefore focuses on improving testability not only for V1 projects but also for legacy applications. For those, testability can still be improved through solutions other than design changes.

Scope: Testability @ System Layer (Black Box)

In the real world, testing is nothing but attempting to USE the product and sincerely hoping it works. Sometimes we get stuck because its behaviour can’t be determined reliably (I call this ‘unintentional discoverability of poor testability’).

Testers are the customers’ best friends (or so we want them to be): they are paid to put themselves in the end users’ shoes and test the product from their point of view. Testability is therefore quite important at this layer, because this is how the product will be used in the real world.

The challenge DFT (Design for Test) faces today is the need to look beyond the component level and focus on system requirements and life-cycle maintenance support. This creates the need to ensure testability at the system level, even though controllability and observability are easier to achieve at a granular level.

A product’s user interface is one of the most challenging areas in which to increase testability. It’s also the part our customers will use the most, so it’s important to make your user interface code as testable as possible.

Testability matters more than ever at the functional layer because of its correlation with other crucial factors such as usability, accessibility, security and discoverability.

As usual, there's no silver bullet. Some approaches may be better than others, but there's always some limitation that prevents adopting them in every possible context. A lot of great material has been written on using DFT, TDD, mock objects, emulation layers and test plug-ins to improve testability, but they are outside the scope of this discussion.

Solutions

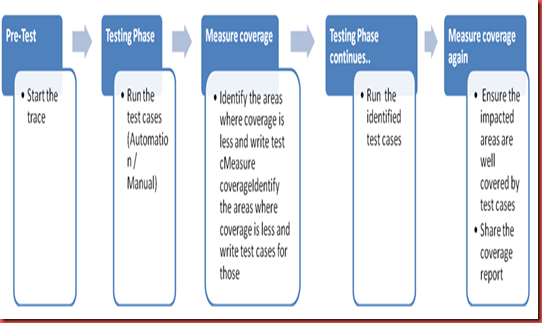

To improve automated testability, we will discuss a few solutions:

- building a ‘black box’
- merging test and development code
- intelligently selecting test data

Developing ‘Black box’

A ‘black box’ can be developed using test hooks. Test hooks are quite different from mock objects, however: a mock replaces a dependency, whereas a hook exposes what the real code is doing. Hooks are valuable whenever you need the details of what a process is doing internally.

“Good test hooks are one of those "I'll know it when I see it" things. Basically, if it gives you the data you need when you need it, it’s good.”
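Below is a minimal sketch of a test hook in Python, under stated assumptions. `register_hook`, `fire_hook` and the invoice calculation are hypothetical names, not an existing library. Unlike a mock object, the hook leaves the real logic running. It simply publishes intermediate values to whoever is listening.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

# Hypothetical hook registry: production code publishes intermediate values
# at named points; tests or support tools may subscribe to them.
_hooks: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)


def register_hook(point: str, callback: Callable[[Any], None]) -> None:
    """Subscribe a callback to a named hook point."""
    _hooks[point].append(callback)


def clear_hooks() -> None:
    """Remove all subscriptions (e.g. between tests)."""
    _hooks.clear()


def fire_hook(point: str, value: Any) -> None:
    """Called by production code; does nothing when nobody is listening."""
    for callback in _hooks.get(point, ()):
        callback(value)


def compute_invoice_total(prices: List[float], discount_rate: float, tax_rate: float) -> float:
    """Hypothetical business logic with two observable intermediate steps."""
    subtotal = sum(prices)
    fire_hook("invoice.subtotal", subtotal)
    discounted = subtotal * (1 - discount_rate)
    fire_hook("invoice.discounted", discounted)
    return round(discounted * (1 + tax_rate), 2)
```

A test can do three things:

1. Subscribe to `invoice.subtotal` and `invoice.discounted`.
2. Call `compute_invoice_total([100.0, 50.0], 0.1, 0.2)`.
3. Check each intermediate value, not only the final total.

When no callback is registered, `fire_hook` only performs a dictionary lookup.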

There are two kinds of applications where a ‘black box’ can be very useful, especially (a):

a) Applications with Thick UI

If your system’s business logic is separated from the UI, you can hit the web services/APIs directly and test the logic with a custom harness. If not, you need a way to tunnel through the UI and other layers to reach the business logic. That is when testability at the system (UI) layer becomes extremely important. It matters most for applications that keep business logic inside the UI layer as well; that is not a good practice, but it may be true of legacy apps.

b) Applications with Thin UI

A test hook can be useful here even if you are calling the APIs directly; for example, it can expose specific intermediate results.

Approaches:

While developing a black box to increase visibility, you need to focus on the “Observability” and “Controllability” components of the classic SOCK model:

- Observability means watching the system’s behaviour and reaction to each input given to the application.
- Controllability is primarily the ability to control the application’s state and execution path.

Adding (optional) detailed logging using Test Hooks

An option to turn detailed logging on or off helps observability in the following ways:

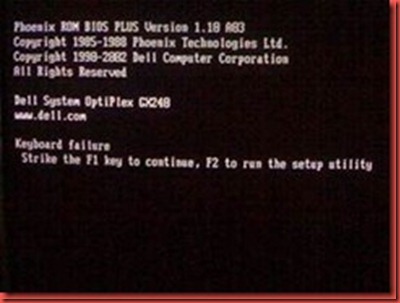

i) Validating business logic: when many intermediate steps are involved and testers want to validate the logic at each step, detailed logs let them track it exactly as it happens.

ii) Visualizing the application’s execution flow (the behind-the-scenes action): logs show exactly what is happening under the hood and when (e.g. timestamps). This avoids guesswork and the need to investigate layer after layer to find the real culprit.

iii) Self-documentation: someone new to the team can understand the application’s data and control flow just by reading these detailed logs.
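One way to provide the on/off switch is sketched below with Python's standard `logging` module. The environment variable `APP_DETAILED_LOGGING` and the `place_order` feature are hypothetical. The detailed DEBUG trail always lives in the code but is only emitted when the switch is on.

```python
import logging
import os

# Hypothetical switch name; a config file entry or command-line flag
# could play the same role.
DETAILED_LOGGING_ENV = "APP_DETAILED_LOGGING"

logger = logging.getLogger("orders")


def configure_detailed_logging() -> bool:
    """Enable verbose 'black box' logging only when the switch is set to 1."""
    enabled = os.environ.get(DETAILED_LOGGING_ENV, "0") == "1"
    logger.setLevel(logging.DEBUG if enabled else logging.WARNING)
    return enabled


def place_order(quantity: int, unit_price: float) -> float:
    """Hypothetical feature; each DEBUG line records an intermediate step."""
    logger.debug("place_order start quantity=%d unit_price=%.2f", quantity, unit_price)
    amount = quantity * unit_price
    logger.debug("computed amount=%.2f", amount)
    if quantity >= 10:
        amount *= 0.95
        logger.debug("bulk discount applied, amount=%.2f", amount)
    logger.debug("place_order end")
    return amount


if __name__ == "__main__":
    # %(asctime)s adds the timestamps mentioned in point ii).
    logging.basicConfig(format="%(asctime)s %(name)s %(levelname)s %(message)s")
    configure_detailed_logging()
    place_order(12, 2.50)
```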

Optional parameters to override default settings

This can improve controllability when, say, a tester wants to override the default timeout so that the test runs faster. These additional parameters let testers control the application’s state, which makes the application easier to test.
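Below is a sketch of optional overrides, assuming a hypothetical sync job with a 300-second default timeout.

- Production callers pass nothing and get the defaults.
- A test can pass `timeout_seconds=2.0` to run faster.
- Validation rejects nonsensical values, so a test override cannot silently misconfigure the job.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class SyncSettings:
    """Hypothetical production defaults for a background sync job."""
    timeout_seconds: float = 300.0
    retry_count: int = 3


DEFAULTS = SyncSettings()


def effective_settings(timeout_seconds: Optional[float] = None,
                       retry_count: Optional[int] = None) -> SyncSettings:
    """Apply optional test overrides on top of the defaults.

    Only explicitly supplied parameters replace a default, so callers
    that pass nothing get normal production behaviour.
    """
    overrides = {}
    if timeout_seconds is not None:
        if timeout_seconds <= 0:
            raise ValueError("timeout_seconds must be positive")
        overrides["timeout_seconds"] = timeout_seconds
    if retry_count is not None:
        if retry_count < 0:
            raise ValueError("retry_count must be non-negative")
        overrides["retry_count"] = retry_count
    return replace(DEFAULTS, **overrides)
```

Keeping the defaults in one frozen object means an override produces a new settings value rather than mutating shared state. That makes it harder for one test to leak its settings into the next.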

Adding “Self-Testable” features

These are methods explicitly written into the code, publicly exposed and documented as features. Testers, support staff or even users can run them to diagnose the behaviour of basic yet critical functionality.

“Think of these like a hard disk failure test, which users can run to troubleshoot a problem without needing support professionals to do the preliminary investigation.”

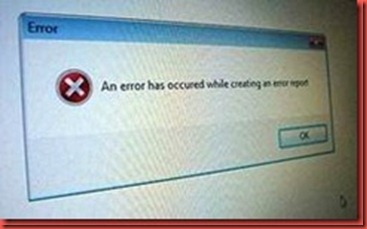
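Below is a sketch of a publicly exposed self-test entry point. The check names and the `run_self_test` runner are hypothetical. Each check returns a pass/fail flag and a human-readable detail. A crashing check is reported as a failure instead of aborting the whole run.

```python
import tempfile
from dataclasses import dataclass
from typing import Callable, List, Tuple

Check = Callable[[], Tuple[bool, str]]


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def check_temp_dir_writable() -> Tuple[bool, str]:
    """Example check: can the application write a scratch file?"""
    try:
        with tempfile.TemporaryFile() as handle:
            handle.write(b"self-test")
        return True, "scratch file written"
    except OSError as exc:
        return False, f"cannot write scratch file: {exc}"


def run_self_test(checks: List[Tuple[str, Check]]) -> List[CheckResult]:
    """Run every check; a crashing check becomes a FAIL instead of aborting the run."""
    results: List[CheckResult] = []
    for name, check in checks:
        try:
            passed, detail = check()
        except Exception as exc:  # deliberately broad: diagnostics must not crash
            passed, detail = False, f"unexpected error: {exc!r}"
        results.append(CheckResult(name, passed, detail))
    return results


def format_report(results: List[CheckResult]) -> str:
    """Plain-text report a user or support engineer can read."""
    return "\n".join(
        f"[{'PASS' if r.passed else 'FAIL'}] {r.name}: {r.detail}" for r in results
    )


# Hypothetical list of checks shipped with the product.
DEFAULT_CHECKS: List[Tuple[str, Check]] = [
    ("temp directory writable", check_temp_dir_writable),
]

if __name__ == "__main__":
    print(format_report(run_self_test(DEFAULT_CHECKS)))
```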

The hardest part of designing a black box might be deciding whether to leave it in the production build. If you do, it can double as a set of self-testable features shipped with the software for easier FD/FI (Fault Detection/Fault Isolation). For example, allowing your customers to turn on debug logging and send you the results will save time for everyone. Many support teams and advanced business users are perfectly willing to do this, as long as it's under their control and they can see what they're sending you. If you do leave your black box in, treat it as the feature it is.
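Shared logs stay under the user's control if the bundle is built as plain text the user previews before sending. This sketch assumes two hypothetical redaction rules: email addresses, and card-like numbers of 13 to 16 digits. A real product would need a reviewed list of sensitive fields.

```python
import re
from typing import Iterable

# Hypothetical redaction rules, for illustration only.
_EMAIL = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")
_CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13-16 digits, optional separators


def redact(line: str) -> str:
    """Mask values the user should not have to share with support."""
    line = _EMAIL.sub("<email>", line)
    return _CARD.sub("<card-number>", line)


def build_support_bundle(log_lines: Iterable[str]) -> str:
    """Return exactly the text that would be sent, so the user can review it first."""
    return "\n".join(redact(line.rstrip("\n")) for line in log_lines)
```

The product would show the returned text to the user and only send it after explicit confirmation. That keeps the "they can see what they're sending you" promise literal.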

Providing “Alternate” execution paths

a) This is the ability to perform the same operation in multiple ways. If the main option isn’t working, it doesn’t become a showstopper, and the operation can still be tested through an alternative. For example, a keyboard shortcut can come in handy when a UI control isn’t working.

b) Often an operation is hard to test from the UI but easy to perform from the command line. Consciously providing multiple means of achieving the same result therefore goes a long way toward helping testers.
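Below is a sketch of an alternate command-line path using Python's standard `argparse`. The `export` command and the `export_report` function are hypothetical. The CLI and the UI call the same business function, so a test through the CLI exercises the same logic the UI button would.

```python
import argparse
import sys
from typing import List, Optional


def export_report(report_id: str, fmt: str) -> str:
    """Hypothetical business operation shared by the UI and the CLI."""
    return f"report {report_id} exported as {fmt}"


def main(argv: Optional[List[str]] = None) -> int:
    parser = argparse.ArgumentParser(
        description="Command-line path to operations the UI also exposes."
    )
    sub = parser.add_subparsers(dest="command", required=True)
    export = sub.add_parser("export", help="export a report without opening the UI")
    export.add_argument("report_id")
    export.add_argument("--format", choices=["csv", "pdf"], default="csv")
    args = parser.parse_args(argv)

    if args.command == "export":
        # Same function the UI's Export button would call.
        print(export_report(args.report_id, args.format))
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

For example, saving this as a hypothetical `tool.py` and running `python tool.py export 42 --format pdf` prints `report 42 exported as pdf`.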

One Code: Merge test automation and development code

“After all, code is just a piece of code, and it knows no boundaries.”

Too often, the development and testing silos don’t intersect until it's 'too late'. Consider keeping test automation code and development code in the same Visual Studio solution.

I'm a huge advocate of merging test and development code for a variety of reasons.

1. Automation ‘Rat Race’ Challenge: automation test code constantly tries to catch up with code changes driven by requirement changes or bug fixes. This often breaks the automation and causes rework and maintenance effort.

With both in one solution, refactoring can propagate UI changes to the test code before a test script runs, fails and discovers the change.

2. Whenever the code changes, the impacted test code can be seen and checked for potential issues arising from the dependencies between them. Regressions can thus be identified as soon as a code change is made, and the testing team can run just the impacted tests.

3. We get maximal code reuse: our test utilities can use exactly the same code to create and modify data. This promotes ownership between test and development in both directions. Quality can be pushed upstream when a solid automation infrastructure is shared by developers and testers alike to improve overall product quality.

4. ‘One Click Automation’: every development build (daily or weekly) can seamlessly trigger automation (unit/functional) using VS 2010 features.
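Point 2 above can be acted on with test impact selection. Each production file is mapped to the tests that exercise it, and only the tests mapped to the changed files are run. The map below is hypothetical and hand-written; in practice it might be derived from project references or coverage data. Unmapped files fall back to running everything, because a change no one has mapped could affect any test.

```python
from typing import Dict, Iterable, Set

# Hypothetical map from production files to the automated tests that exercise them.
TEST_MAP: Dict[str, Set[str]] = {
    "billing/invoice.py": {"test_invoice_totals", "test_invoice_export"},
    "billing/tax.py": {"test_invoice_totals", "test_tax_rules"},
    "ui/login_form.py": {"test_login_ui"},
}


def impacted_tests(changed_files: Iterable[str],
                   test_map: Dict[str, Set[str]] = TEST_MAP) -> Set[str]:
    """Return the tests impacted by a change set."""
    selected: Set[str] = set()
    for path in changed_files:
        if path not in test_map:
            # Conservative fallback: an unmapped change could affect anything.
            return set().union(*test_map.values())
        selected |= test_map[path]
    return selected
```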

Like many things, this is about people working together and if you have a good level of DEV/QA cohesion, it goes A LONG way.

Intelligent Test Data can improve testability as well

One of the important aspects of testability is achieving complete code path coverage and simulating situations cost-efficiently. The monster to tame is the practically infinite number of permutations and combinations. Exhaustive testing is impractical and purely random testing is risky, so one can’t be certain of adequate coverage.

We wrote a Think Week paper last year that covers this topic in depth: “Intelligent Selection of test data by applying learning from Marketing Research”. In it we proposed strategies for choosing test inputs/data so as to achieve high coverage with minimum testing effort.
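The Think Week paper's marketing-research strategies are not reproduced here. Instead, this sketch uses a different, well-known technique toward the same goal of high coverage with few tests: greedy pairwise (all-pairs) selection. It picks test cases until every pair of parameter values appears together at least once. The parameters are hypothetical. Enumerating the full Cartesian product as candidates only suits small parameter spaces.

```python
from itertools import combinations, product
from typing import Dict, Hashable, List, Sequence, Set, Tuple

# (index of parameter A, value of A, index of parameter B, value of B)
Pair = Tuple[int, Hashable, int, Hashable]


def _pairs_of(row: Sequence[Hashable]) -> Set[Pair]:
    """All value pairs that a single test case (row) covers."""
    return {(i, row[i], j, row[j]) for i, j in combinations(range(len(row)), 2)}


def pairwise_suite(parameters: Dict[str, List[Hashable]]) -> List[Dict[str, Hashable]]:
    """Greedy all-pairs selection over the full Cartesian product of candidates."""
    names = list(parameters)
    value_lists = [parameters[name] for name in names]
    candidates = list(product(*value_lists))
    if len(names) < 2:
        # With fewer than two parameters there are no pairs; every value is needed.
        return [dict(zip(names, row)) for row in candidates]

    uncovered: Set[Pair] = set()
    for i, j in combinations(range(len(names)), 2):
        for a in value_lists[i]:
            for b in value_lists[j]:
                uncovered.add((i, a, j, b))

    suite: List[Dict[str, Hashable]] = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda row: len(_pairs_of(row) & uncovered))
        uncovered -= _pairs_of(best)
        suite.append(dict(zip(names, best)))
    return suite
```

Consider three browsers, two operating systems and two locales. The exhaustive suite has 12 combinations, while the pairwise suite covers every pair of values with fewer rows. Each greedy step adds at least one new pair, because any uncovered pair appears in some candidate, so the loop always terminates.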

Benefits


Overall testing efforts can be reduced when features become easier to test.


The ‘black box’ and ‘merging test and development code’ approach can make our tests simpler and automation much more robust.


‘Black box’ features can double as supportability features for support professionals and advanced users (easier Fault Detection & Fault Isolation).

IT support cost and effort can be reduced by giving support professionals a ‘black box’ they can use to diagnose issues with less dependency on the IT Engineering team (ticket reduction).

Conclusion

In this paper I have attempted to highlight testability as a major challenge that deserves its due importance. Addressing it helps reduce overall testing cost/effort and improves customer UX (user experience) by making the product simpler to test and use.

Implementing the changes suggested in this paper will no doubt take substantial effort, varying from project to project with the complexity of the apps. For legacy apps it can be done incrementally, whereas for new apps it can be built into the code from day 1 as a best practice.

References

http://news.cnet.com/8301-13860_3-10052412-56.html#ixzz10S1RPf00

http://news.cnet.com/Microsoft%20to%20add%20black%20box%20to%20Windows/2100-1016_3-5684051.html#ixzz10nq2SAIl

http://blogs.msdn.com/b/micahel/archive/2004/08/04/207997.aspx

http://blogs.msdn.com/b/dustin_andrews/archive/2008/01/11/driving-testability-from-the-ui-down.aspx

Biography

Raj is a Test Consultant specializing in testing techniques, test automation and testability in domains such as Manufacturing, Healthcare and Higher Education. He holds an APICS certification in Supply Chain Management. With expertise in Rational and Mercury testing tools, he has helped teams develop test automation strategies and architectures at companies such as Cognizant Technology Solutions and Oracle Corporation. He also provides training in automated testing architectures and design. He is QAI (CSTE) and ISTQB certified and holds a master's degree in Computer Applications. He currently works at Microsoft India's Business Intelligence COE and has represented Microsoft and Oracle as a speaker at international test conferences. His passion @ http://www.itest.co.nr

![clip_image002[4] clip_image002[4]](http://lh3.ggpht.com/_IWEJ_QTVH1c/TE3TzKXN3VI/AAAAAAAAGQ4/5XVRgFZ3tD0/clip_image002%5B4%5D%5B3%5D.jpg?imgmax=800)

![clip_image004[4] clip_image004[4]](http://lh4.ggpht.com/_IWEJ_QTVH1c/TE3T2X4FbKI/AAAAAAAAGRI/vwWx0ZGcdpQ/clip_image004%5B4%5D%5B3%5D.jpg?imgmax=800)

![clip_image006[4] clip_image006[4]](http://lh3.ggpht.com/_IWEJ_QTVH1c/TE3T59qm4SI/AAAAAAAAGRY/-hnRIchC-DU/clip_image006%5B4%5D%5B3%5D.jpg?imgmax=800)

![clip_image008[4] clip_image008[4]](http://lh4.ggpht.com/_IWEJ_QTVH1c/TE3T855fQtI/AAAAAAAAGRo/C-hgHEBkSGc/clip_image008%5B4%5D%5B3%5D.jpg?imgmax=800)